UChicago Computer Scientists Bring in Generative Neural Networks to Stop Real-Time Video From Lagging

In a world where remote work and virtual communication are moving to the forefront of our lives, there are few things more frustrating than losing connection. Video quality disintegrates, audio becomes unrecognizable, and sometimes we are left with no choice but to drop out of a meeting and try again. It’s a problem that has evaded a quality solution for decades, but a team from the Department of Computer Science at the University of Chicago thinks it has found a promising one.

How Real-Time Video Works

The breakdown occurs, especially in long-distance video, because part of the data is taking too long to move through the Internet. When data needs to be retransmitted through the Internet, it essentially blocks other received data from being decoded and creates the visual stuttering of content we have all grown to dislike.

To work correctly, live streaming video, such as online meetings and cloud gaming, turns packets of data into a sequence of frames that function like a flip book. Frames need to arrive in consecutive order, with no part of the picture missing, so that the receiver can make sense of what the sender is trying to convey.
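To make the flip-book constraint concrete, here is a minimal sketch of how one late frame holds up everything behind it. The function name `playback_times`, the 33 ms frame interval, and the 150 ms retransmission delay are all illustrative assumptions, not measurements from the research.

```python
def playback_times(arrival_ms):
    """Return when each frame can be shown if frames must be decoded in order.

    arrival_ms[i] is a hypothetical time (in ms) at which frame i has fully
    arrived, including any retransmission delay.
    """
    shown = []
    ready = 0
    for t in arrival_ms:
        # A frame cannot be shown before it arrives, nor before its predecessor.
        ready = max(ready, t)
        shown.append(ready)
    return shown


# Frames are sent every 33 ms; frame 2 is retransmitted and arrives 150 ms late.
arrivals = [0, 33, 66 + 150, 99, 132]
print(playback_times(arrivals))
```

In this toy run, frames 3 and 4 arrive on time but cannot be displayed until frame 2 shows up, which is the stutter a viewer sees.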

The Current Problem

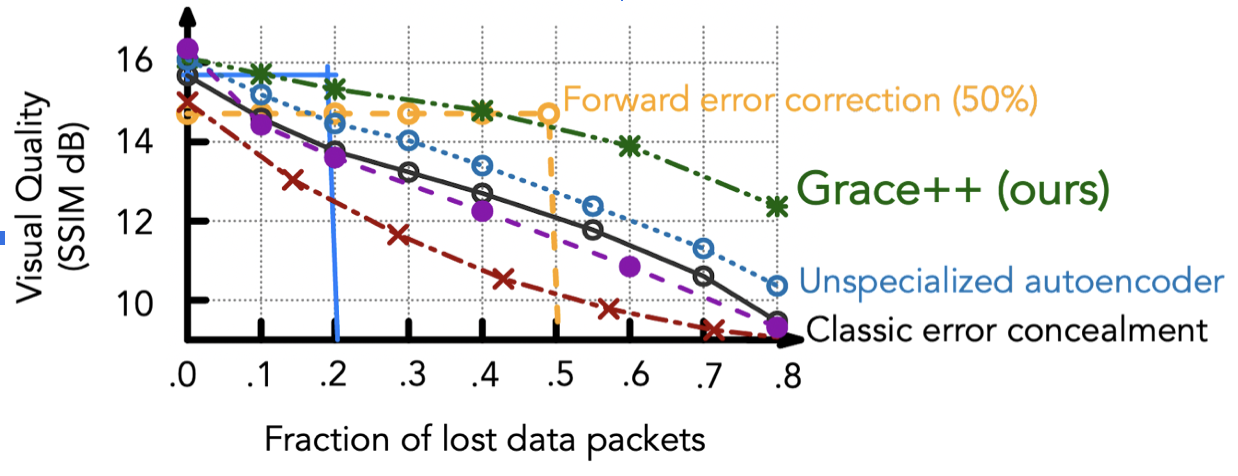

Unfortunately, when network quality becomes poor, any frame can be affected by data loss. Real-time video clients have had to gamble on which frames to protect with redundant coding so that users don’t experience the ever-so-common pixelation of content. As with any gamble, results may vary.
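One simple form of redundant coding is an XOR parity packet, sketched below. This is a generic illustration of the idea, not the scheme any particular video client uses; the helper names `make_parity` and `recover` are hypothetical, and the sketch assumes all packets in a group have the same length.

```python
from functools import reduce


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """XOR equal-length packets into one redundant parity packet."""
    return reduce(xor_bytes, packets)


def recover(received, parity):
    """Rebuild at most one missing packet (marked None) from the parity packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        return None  # too much loss: the redundancy bet did not pay off
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XORing the parity with every surviving packet leaves the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


packets = [b"abcd", b"efgh", b"ijkl"]
parity = make_parity(packets)
print(recover([b"abcd", None, b"ijkl"], parity))
```

The gamble is visible in `recover`: one extra packet covers a single loss in the group, but a second loss in the same group leaves the frame unrecoverable.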

“The key challenge in video streaming is how to tolerate data packet loss on a network,” said Assistant Professor of Computer Science Junchen Jiang. “If you are doing real time video streaming, the content cannot be pre-encoded like it is for Netflix or a YouTube video. There is no buffer on your side to conceal any jitter or data retransmission in the network. So there is a fundamental trade-off here: you can either have good quality, or you can tolerate delay or loss in the network.”

A Viable Solution

The idea arose two years ago when second-year Ph.D. student Yihua Cheng and then-master’s student Anton Arapin were studying computer vision literature and came across a tool, called an autoencoder, that uses neural networks to encode and recreate pictures. Because it is generative, it can still recreate realistic-looking pictures when the encoded data is corrupted. That sparked the idea that this tool could be repurposed to enable real-time streaming that is not disrupted by network loss.

Over the past two years, Cheng and the team of UChicago scientists have drawn inspiration from this tool to develop Grace++, a patent-pending, real-time video communication system that uses an autoencoder to encode and decode frames using specially trained neural networks and a custom frame delivery protocol. When a subset of data goes missing in transit, Grace++ fills in the missing piece by essentially masking the hole with generic data so the entire storyline doesn’t get backed up and fall apart.
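The masking step can be pictured with a small sketch. Everything here is an assumption made for illustration: the article does not describe how Grace++ splits a frame into packets or what "generic data" it substitutes, so the interleaving in `packetize` and the zero fill value in `reassemble` are hypothetical stand-ins, and the neural network decoder itself is left out.

```python
def packetize(latent, num_packets):
    """Spread the encoded values across packets so a loss thins the frame evenly."""
    return [latent[i::num_packets] for i in range(num_packets)]


def reassemble(packets, length, fill=0.0):
    """Rebuild the encoded frame, substituting `fill` for values in lost packets."""
    n = len(packets)
    latent = [fill] * length
    for i, packet in enumerate(packets):
        if packet is not None:
            # Put surviving values back into their original positions.
            latent[i::n] = packet
    return latent


encoded = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # stand-in for an autoencoder's output
packets = packetize(encoded, 3)
packets[1] = None  # this packet was lost in the network
print(reassemble(packets, len(encoded)))
```

The receiver never waits for a retransmission: it hands the patched values straight to the decoder, which, in a system trained for this, would be expected to produce a plausible frame anyway.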

“Let’s say when I send my video to you in real time and some data is lost in the transmission, it’s going to chop some data,” explained Jiang, who oversaw the research. “That missing data could then be generated by the neural network on your site instead.”

Limitations

One of the biggest limitations of this type of system is that it requires an expensive graphics processing unit (GPU) specially designed to run a neural network. Although they are becoming more common, GPUs are not usually available on cellphones or other resource-constrained devices. The team is trying to mitigate this limitation by shrinking the neural network so it can run on a wider range of devices. The system would also need an additional player to be installed on the computer before it could work, which requires more than a couple of lines of code. The team is trying to integrate it into a browser, which would essentially allow someone to install it as a plugin.

Grace++ is in the process of being patented through the University of Chicago’s Polsky Center for Entrepreneurship and Innovation. Two archived versions of the research are available, and a peer-reviewed version is currently going through submission. The team is also partnering with NVIDIA, a world leader in artificial intelligence computing, to create a follow-up version of the system.