Rethinking AI as a Thought Partner: Perspectives on Writing, Programming, and More

Writing has always been a deeply human endeavor, reflecting our thoughts, beliefs, and creative processes. However, the incorporation of artificial intelligence (AI) as a writing tool has challenged traditional understandings of authorship, cognition, and collaboration. In a recent perspective study, Assistant Professor Mina Lee from the Department of Computer Science at the University of Chicago and her collaborators at the University of Cambridge, Princeton University, New York University, The Alan Turing Institute, and Massachusetts Institute of Technology explored the concept of AI as a “thought partner” rather than a tool.

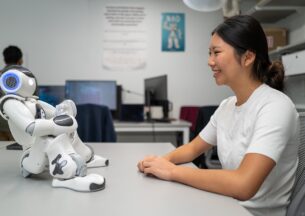

The study presents a novel way to envision human-AI interaction, particularly in domains like storytelling, programming, medicine, and embodied assistance. It emphasizes how AI could be designed explicitly to complement human reasoning, adapting dynamically to support users in more personalized and effective ways.

At the heart of this perspective is the Bayesian framework, a method that models human reasoning by starting with an initial belief (a prior) and refining it through new observations or data. Unlike traditional language models that rely on vast data to generalize behavior, Bayesian models explicitly account for uncertainty and variability in human thought. This allows AI systems to adjust to an individual user’s needs over time, learning from their behaviors, preferences, and constraints. For example, a Bayesian AI writing assistant could rapidly adapt its suggestions based on whether the user is brainstorming, drafting, or revising their text, offering tailored support that aligns with their stage in the creative process and possibly doing so in a more interpretable form. This contrasts with current systems, which often rely on a one-size-fits-all heuristic to decide when and how to provide suggestions. Auto-completions, for instance, may disrupt rather than enhance the user experience, and such systems may lack interpretability and a principled expression of uncertainty.
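To make the prior-and-update idea concrete, here is a minimal Python sketch of how a writing assistant might infer a writer's stage from observed actions using Bayes' rule, where the updated belief is proportional to the prior times the likelihood of the observation. This is an illustration only, not the method from the study: the stage names follow the example above, while the action labels (`new_idea`, `long_typing`, `small_edit`), the likelihood values, and the function names are all hypothetical assumptions.

```python
from typing import Dict, List

STAGES = ("brainstorming", "drafting", "revising")

# Hypothetical likelihoods P(action | stage). These numbers are invented
# for illustration and are not taken from the study.
LIKELIHOOD: Dict[str, Dict[str, float]] = {
    "brainstorming": {"new_idea": 0.6, "long_typing": 0.3, "small_edit": 0.1},
    "drafting": {"new_idea": 0.2, "long_typing": 0.6, "small_edit": 0.2},
    "revising": {"new_idea": 0.1, "long_typing": 0.2, "small_edit": 0.7},
}


def update(belief: Dict[str, float], action: str) -> Dict[str, float]:
    """One Bayesian update: posterior is proportional to prior * likelihood."""
    unnormalized = {s: belief[s] * LIKELIHOOD[s][action] for s in STAGES}
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}


def infer_stage(actions: List[str]) -> Dict[str, float]:
    """Start from a uniform prior and refine it with each observed action."""
    belief = {s: 1.0 / len(STAGES) for s in STAGES}
    for action in actions:
        belief = update(belief, action)
    return belief


posterior = infer_stage(["small_edit", "small_edit", "long_typing"])
most_likely = max(posterior, key=posterior.get)
print(most_likely, {s: round(p, 3) for s, p in posterior.items()})
```

Because the belief is an explicit probability distribution, the assistant's uncertainty is inspectable: it could hold back suggestions while no stage is clearly favored, which is one way the interpretability described above might be realized.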

“We put forward an argument for what a good AI thought partner should have,” Lee stated. “This involves the three desiderata in the paper, which outlines that 1) the model should be able to understand us, 2) we can understand the model, and 3) we should have a shared understanding of the world. The Bayesian framework provides a nice method for us to do that systematically.”

Unlike tools, which assist with specific tasks in static and passive ways, humans engage with their partners dynamically and proactively, promoting collaborative modes of thinking. Drawing inspiration from human-to-human collaboration, the team identified key modes of collaborative thought that an AI thought partner could facilitate, such as sensemaking, deliberation, and ideation. These activities require AI systems to not only understand human reasoning but also make their own reasoning interpretable to users. Achieving this shared understanding is essential for fostering trust and efficiency in human-AI partnerships.

Lee and her collaborators introduced practical applications of this perspective across multiple domains. In programming, an AI thought partner could model a coder’s buggy mental model of the programming environment and interactively help to ‘patch’ bugs in their understanding. In storytelling, such a system could adapt its support to the writer’s needs while explicitly modeling the audience’s experience, refining its suggestions as the narrative evolves. Similarly, in the medical field, an AI thought partner could assist clinicians by accounting for their time constraints and diagnostic habits, selectively suggesting actions that enhance decision-making without overwhelming them with redundant information.

“We have limited time, limited memory, limited knowledge, and within those limited resources, we try to make rational decisions,” Lee stated.

“But it may not be optimal, right? That’s where the Bayesian framework shines,” Lee continued. “It allows AI systems to recognize and work within these human limitations, offering support that complements rather than competes with our cognitive processes. By modeling uncertainty and adapting iteratively, these systems can provide just-in-time assistance that aligns with how humans actually think and work. For example, consider a writer crafting a short story: a current AI system might suggest generic plot twists or character traits based on patterns in its training data, often interrupting the writer’s flow or proposing ideas that don’t fit the narrative’s tone. In contrast, a Bayesian AI thought partner could first infer the writer’s intent—whether they are exploring possibilities, refining a plotline, or polishing language—and adapt its suggestions accordingly. It might help brainstorm unconventional twists during the ideation phase, ask clarifying questions when the narrative is ambiguous, or propose subtle linguistic refinements when the story nears completion. This nuanced support fosters creativity without disrupting the writer’s process.”

Despite the promising potential of AI thought partners, Lee acknowledged the challenges and ethical considerations of integrating these systems into everyday tasks. One critical area of exploration is how AI thought partners might shape user cognition and behavior. For instance, while a Bayesian AI system could support students by adapting its feedback to their unique learning styles or helping them tackle complex problems, it also raises concerns about overreliance.

“AI has the potential to augment human thinking, but it must do so without diminishing our capacity for creativity and critical thinking,” Lee emphasized.

This tension is particularly pronounced in educational settings, where some educators worry that AI systems might bypass essential skill development, while others see them as opportunities to foster innovation and efficiency. By designing systems that encourage synergistic collaboration, the researchers hope to strike a balance, ensuring that AI thought partners complement human cognition without replacing it. This approach highlights the importance of aligning AI design with broader societal goals, ensuring that these systems serve as tools for empowerment rather than dependency.

Looking forward, Lee and her students have many exciting projects planned to dive deeper into the intersection of writing and AI. One project focuses on investigating how AI will transform writing education and how to create a curriculum that incorporates AI tools. Another plan is to study how users’ capacity for creativity and critical thinking is impacted by AI.

To learn more about her projects, visit Lee’s research page.